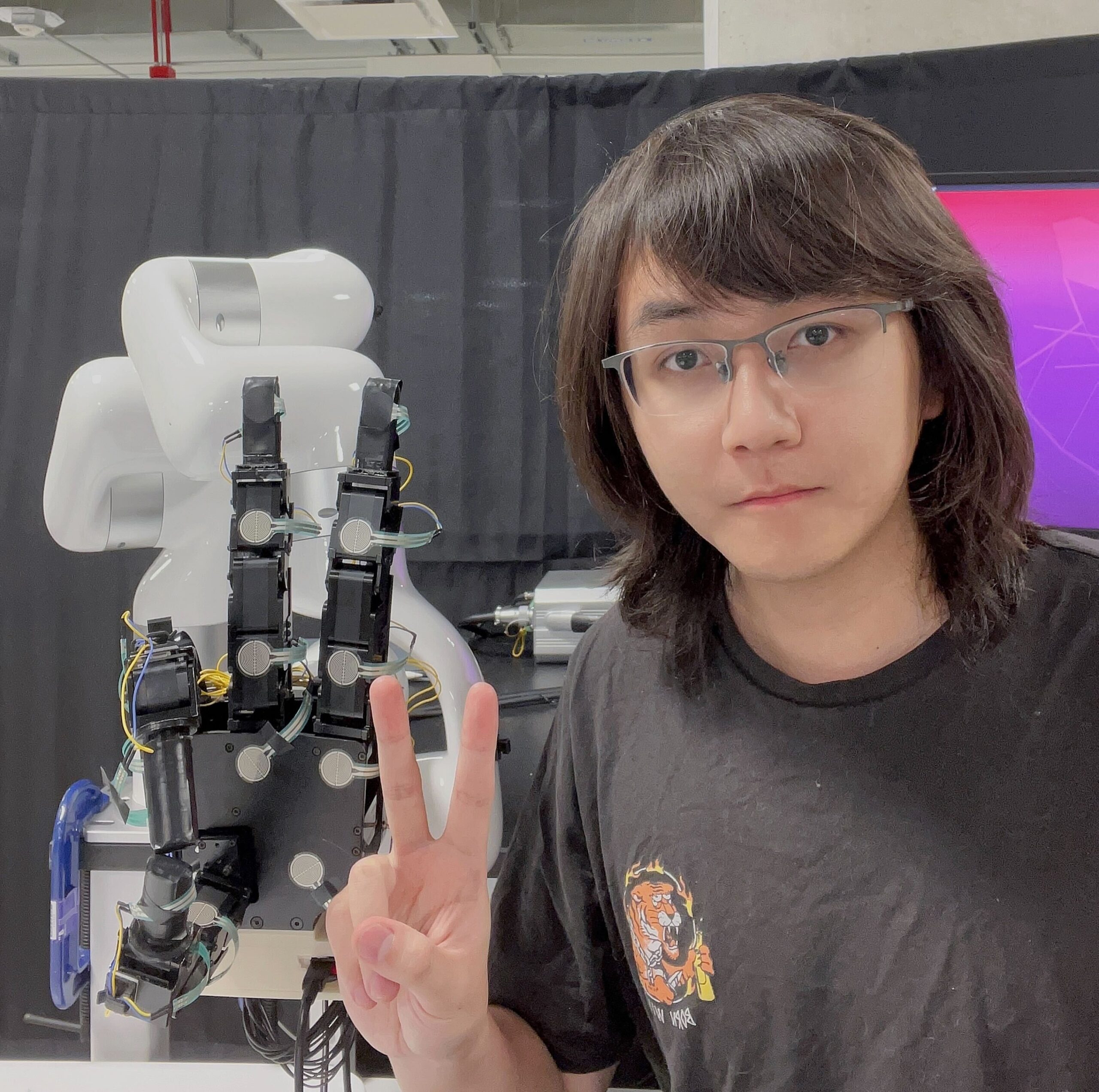

Fall 2025 GRASP SFI: Binghao Huang, Columbia University, “Scaling Touch: Flexible Tactile Skin for Dexterous Manipulation”

October 1 @ 3:00 pm - 4:00 pm

This was a hybrid event with in-person attendance in Levine 307 and virtual attendance…

ABSTRACT

Tactile and visual perception are crucial for fine-grained human interactions with the environment. Developing similar multimodal sensing capabilities for robots can significantly enhance and expand their manipulation skills. This talk presents a scalable tactile stack that couples flexible, large-area tactile skin with multimodal perception and simulation-driven learning. (1) Hardware. I will first introduce a low-cost, flexible tactile “skin,” outline the associated design choices, explain why I value it over other sensors in different contexts, and describe how I integrate it into the system to ensure high-quality tactile data. (2) Learning. Vision and touch have distinct natures yet are both important for robot decision making; I will explain how we encode tactile and visual data so that both contribute effectively to the decision-making process. (3) Scaling up Tactile Data. I will present two directions: (i) collecting large-scale real-world tactile data with portable tactile devices and using this dataset to enhance policy learning, and (ii) leveraging tactile simulation to increase policy robustness. For the latter, we use a real-to-sim-to-real pipeline that calibrates a GPU-parallel tactile simulation and applies RL fine-tuning so that policies can explore with the small corrective “wiggling” behaviors required for tight-fit bimanual assembly.