Text by Jillian Mallon

Researchers in the GRASP Laboratory at the University of Pennsylvania are developing an algorithm for designing functioning robots through folding. The paper that details the design and code behind the project, Kinegami: Algorithmic Design of Compliant Kinematic Chains From Tubular Origami, was awarded an honorable mention for the 2023 IEEE Transactions on Robotics King-Sun Fu Memorial Best Paper Award. The project is a collaborative effort between two GRASP subgroups – the Sung Robotics Lab, which focuses on computational design for soft and origami robots, and Kod*lab, which focuses on modeling and control of bio-inspired robots – aiming to make robot design more accessible to the general public.

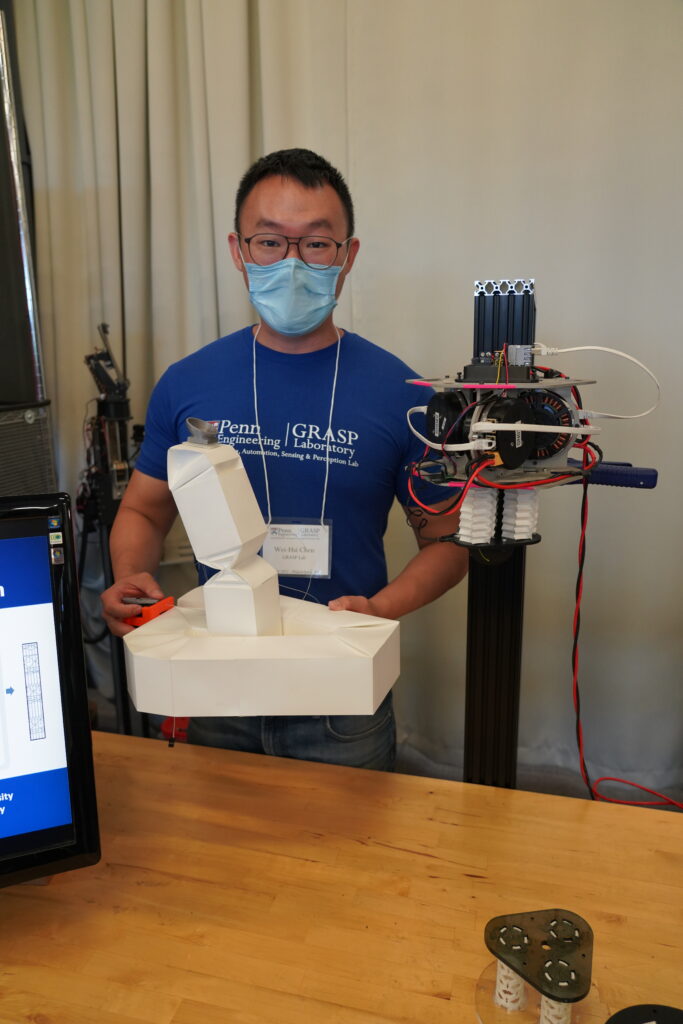

The motivation behind the Kinegami algorithm originated from a design project in which postdoctoral researcher Dr. Wei-Hsi Chen, co-advised in both groups, was using origami-inspired methods to create new lightweight and power-dense dynamical robots. “I became the bridge between Prof. Koditschek’s world of dynamic systems and Prof. Sung’s origami robots,” recalled Chen. “Eventually, our ideas moved from just building a part of the robot using origami up to a point that we asked, ‘Can we build any robot out of origami?’”

The co-authors worked together to design an algorithm that could compose origami joints and connectors into a robotic structure. One key to this algorithm was to connect the problem of robot design to existing work in robot path planning. The other key was to design a library of origami patterns that turns a desired path into a 3D tube along that path.

“In the same way that robot paths help robots to move in 3D space, a robot design is a physical path through 3D space,” explained Sung, “so in order to design robot structures, we need to be able to design non-intersecting paths to connect joints and motors together.”

The input to the Kinegami algorithm is a Denavit-Hartenberg specification of the robot, which identifies the lines along which the joints rotate or translate. The algorithm chooses joint locations along these lines so that they can be connected by collision-free paths. The paths used are Dubins paths, which never bend more sharply than a minimum turning radius, here the radius of the tube. Dubins paths are commonly used in the robotics literature to plan motion over time for vehicles such as cars, boats, and UAVs. Using them for a design problem is a novel approach.
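As a rough illustration of how a Denavit-Hartenberg table pins down joint axis lines, the sketch below chains the standard DH transforms and reads off each joint's axis as a point and a direction. It assumes the classic (distal) DH convention, in which each joint acts along the z axis of the preceding frame; the function names `dh_matrix` and `joint_axes` are hypothetical and are not taken from the Kinegami software, which may use a different convention.

```python
import math

def dh_matrix(theta, d, a, alpha):
    """Homogeneous transform for one standard DH row:
    Rot_z(theta) * Trans_z(d) * Trans_x(a) * Rot_x(alpha)."""
    ct, st = math.cos(theta), math.sin(theta)
    ca, sa = math.cos(alpha), math.sin(alpha)
    return [
        [ct, -st * ca,  st * sa, a * ct],
        [st,  ct * ca, -ct * sa, a * st],
        [0.0,      sa,       ca,      d],
        [0.0,     0.0,      0.0,    1.0],
    ]

def matmul(A, B):
    """Multiply two 4x4 matrices stored as nested lists."""
    return [[sum(A[i][k] * B[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]

def joint_axes(dh_rows):
    """Return one (point, direction) pair per joint.

    Assumption: joint i acts along the z axis of frame i-1, so the axis
    line passes through that frame's origin along its z direction.
    """
    frame = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
    axes = []
    for theta, d, a, alpha in dh_rows:
        origin = tuple(frame[r][3] for r in range(3))
        z_dir = tuple(frame[r][2] for r in range(3))
        axes.append((origin, z_dir))
        frame = matmul(frame, dh_matrix(theta, d, a, alpha))
    return axes

# Hypothetical two-joint example: rows are (theta, d, a, alpha).
example_rows = [(0.0, 0.0, 1.0, math.pi / 2), (0.0, 0.0, 1.0, 0.0)]
for point, direction in joint_axes(example_rows):
    print("axis through", point, "along", direction)
```

In this made-up example the first axis is the base z axis and the second passes through (1, 0, 0) pointing along approximately (0, -1, 0). A design procedure like the one described above would then be free to pick where along each of these lines the physical joint sits.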

“For rigid structures, moving joints along their axes of motion does not affect the motion of the robot, and so you can put them close or far from each other and still get the same function from the robot,” Prof. Sung said. “This means that it is always possible to space joints far enough apart physically to route Dubins paths and create a physically realizable design.”
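The invariance Sung describes can be checked numerically for a revolute joint. The sketch below rotates a point about an axis line using Rodrigues' rotation formula, then repeats the rotation after sliding the joint's anchor point along the same axis; the two results agree. The helper names and the sample numbers are illustrative assumptions, not part of the Kinegami code.

```python
import math

def rotate_about_line(x, point, direction, angle):
    """Rotate 3D point x by `angle` about the line through `point`
    along the unit vector `direction` (Rodrigues' rotation formula)."""
    v = [xi - pi for xi, pi in zip(x, point)]
    u = direction
    c, s = math.cos(angle), math.sin(angle)
    dot = sum(ui * vi for ui, vi in zip(u, v))
    cross = [
        u[1] * v[2] - u[2] * v[1],
        u[2] * v[0] - u[0] * v[2],
        u[0] * v[1] - u[1] * v[0],
    ]
    rotated = [v[i] * c + cross[i] * s + u[i] * dot * (1.0 - c)
               for i in range(3)]
    return [point[i] + rotated[i] for i in range(3)]

def slide_along_axis(point, direction, distance):
    """Move the joint's anchor point along its own axis."""
    return [p + distance * u for p, u in zip(point, direction)]

# Illustrative values: a joint axis parallel to z and a point on the robot.
axis_point = (1.0, 2.0, 0.0)
axis_dir = (0.0, 0.0, 1.0)
tip = (3.0, 1.0, 4.0)

before = rotate_about_line(tip, axis_point, axis_dir, 0.7)
moved_point = slide_along_axis(axis_point, axis_dir, 5.0)
after = rotate_about_line(tip, moved_point, axis_dir, 0.7)

# The largest coordinate difference is at the level of floating-point error.
print(max(abs(a - b) for a, b in zip(before, after)))
```

The reason is that a rotation leaves its own axis direction unchanged, so the offset introduced by sliding the anchor cancels out. For a prismatic joint the same holds trivially, since a translation does not depend on where along the line it is anchored.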

Once the path is identified, the algorithm finds an origami crease pattern that folds into a tube that traces that path with joints at the appropriate locations. The joints and connecting tubes are all made of origami composed into one pattern.

The researchers chose a tube with polygonal base frames as the shape of their structure because it can be both strong and lightweight. “This is a serial chain mechanism, or mechanism with rigid bodies each connected with at most two joints,” described Chen. “Immediately what came to mind were bendy straws. You are able to bend it. It’s tubular-like and you can put a lot of them together to achieve different motions.”

Chen designed five different tube-shaped origami joints and connections that can be combined using the Kinegami algorithm to make the robot move translationally and rotationally. The first connection is a straight tube. The second connection, which Chen named the elbow fitting due to its similarity to pipe fittings used in plumbing, bends the direction of the tube in a circular arc. The third connection type, the twist fitting, changes the orientation of the polygonal base. The two origami joints are called the prismatic and revolute joints. The prismatic joint compresses and elongates the structure by acting as a Hookean spring and translating along a single axis, while the revolute joint bends the structure by acting as a torsion spring and rotating around a single axis.
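For readers unfamiliar with the two spring models, the idealized linear forms are given below. The symbols are generic textbook notation rather than values from the paper: $x$ is the prismatic joint's displacement from rest, $\theta$ is the revolute joint's angle from rest, and $k$ and $\kappa$ are assumed stiffness constants.

$$
F = -k\,x, \qquad \tau = -\kappa\,\theta
$$

In both cases the restoring force or torque grows in proportion to how far the joint is displaced from its rest configuration.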

All of this initial research was documented in a first draft of what is now the Kinegami paper. The co-authors iterated on the paper with the goal of making the final submission comprehensible to readers in a variety of disciplines.

“We spent a lot of time trying to write the paper in the sense that, if you’re a mathematician you can look at the math and you can understand. If you’re an engineer, you don’t need to look at the math. You can look at the graphics and you can understand. If you’re an undergrad engineer or you have basic training on how to build stuff, and you look at the video, you go, ‘Oh, this is how it works,’” explained Chen. “We tried different methods to make it understandable to different people.”

This approach, together with the research itself, earned the paper an honorable mention for the 2023 IEEE Transactions on Robotics King-Sun Fu Memorial Best Paper Award. As a result of the award, the paper was published with open access, which the researchers see as a great opportunity for their work to reach a wider audience. This goal of accessibility is also a driving force for the future directions of this project.

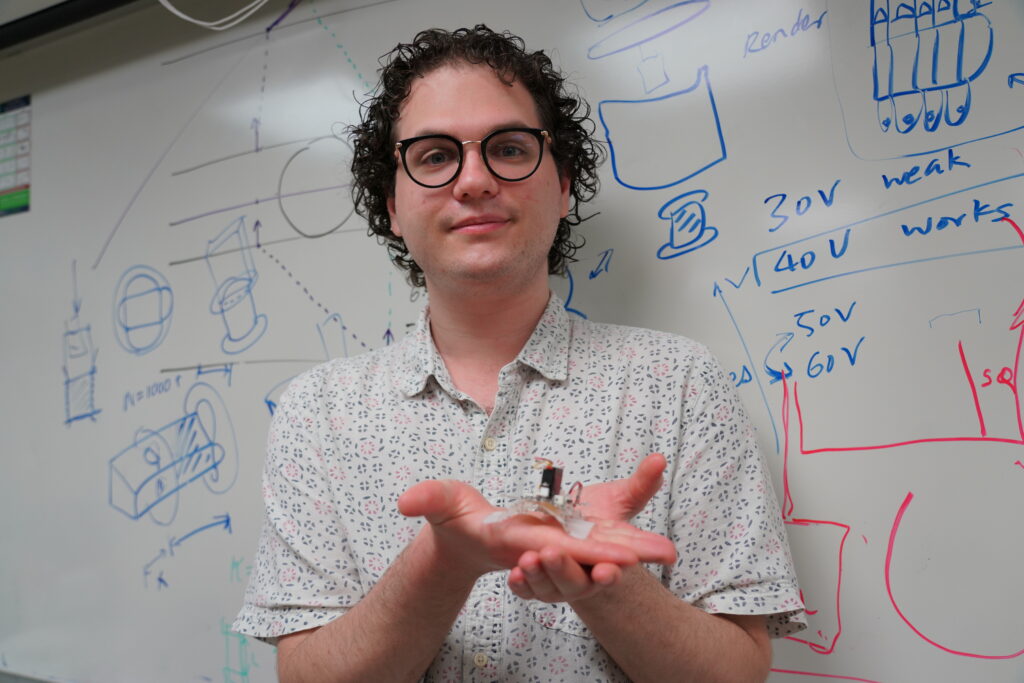

One such direction has been spearheaded by Computer and Information Science (CIS) PhD student Daniel Feshbach. Interestingly, the path that led Feshbach to the Kinegami algorithm is the inverse of Chen’s path. While Chen’s initial research focus was building robots before he branched out into the more computational side of robotics, Feshbach’s background was in computer science before joining Prof. Sung’s research group. This experience made Feshbach uniquely qualified to expand on Chen’s computational work.

Feshbach took the Kinegami program that Chen had developed in MATLAB and converted it into open-source Python software to make the platform more user-friendly and comprehensive. The software will be presented at the 8th International Meeting on Origami in Science, Mathematics and Education (8OSME) in July 2024.

“It wasn’t just a MATLAB to Python translation,” Feshbach clarified. “Now it’s object oriented and the code is more modular, interoperable, and easier to debug.” This means that it is also easier to build on, a feature that Feshbach is taking advantage of to add new visualizations and interactivity that make the design process and the algorithm more intuitive to non-roboticists.

“The goal is that the only prerequisite knowledge you need is basic Python programming and familiarity with matrix multiplication and vector addition,” stated Feshbach. “Ongoing work right now is to get rid of even those prerequisites by making a fully graphically interactive user interface.”

When building the Python repository, Feshbach also added some new features to the software. Chen’s original MATLAB program visualized a design as a bounding sphere for each joint and a center line for each connecting link. The Python version can now display the design as the tubes that the structure will be folded into and visualize how the structure would look or function when manipulated into different positions.

“It’s harder to understand what you’re looking at without showing the design as tubes. And we’re now showing users not just in what we call the ‘neutral configuration’, we’re visualizing the same design in multiple configurations, which we think will help non-expert users understand what they’re looking at,” Feshbach said. “I can look at the design and joint axes and imagine how it will look in different configurations, but that’s my expertise.”

Looking forward, the team has no shortage of ideas for expanding the usefulness of this new tool even further.

Feshbach is working to expand the algorithm so that it is able to handle general robots that have more complicated structures than the chains in manipulator arms, and to expand the fabrication options beyond origami to 3D prints or other materials. “The big picture is working towards a future version of this software to design the structure of a robot in a way that lets the user visualize what it can and can’t do, then edit accordingly, and the software will tell them whether they can,” Feshbach stated. The group is planning to put the software to the test through an origami robotics program held jointly with the University City Arts League this coming year, where they’ll be able to “see whether the software can handle really wacky forms,” according to Prof. Sung.

Chen is testing the capabilities of the program by using it to construct functioning quadrupedal robots out of origami. He is calling the project DOQ, or Dynamic Origami Quadruped. “We think the work could be a new way to build robots. My ongoing works are all showcasing how you could use this to build actual robots,” Chen stated. “The DOQ project includes a procedure of how you would create your own robot with this algorithm.”

“Ultimately, these projects allow us to think more deeply about robots’ forms and functions,” said Sung. “If we can figure out how to make robot design more systematic, the results will tell us something about how to make better designs in the future, and how to bring what is currently a very specialized art form to a broader set of potential users.”

This project was funded by the Army Research Office under the SLICE MURI program grant #W911NF1810327 and by the National Science Foundation grants #1845339 and #2322898. Any opinions, findings, and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the sponsors.

Featured People

Wei-Hsi Chen: PhD, ESE '22 - Postdoctoral Researcher, ESE

Daniel Feshbach: PhD, CIS

Daniel Koditschek: Alfred Fitler Moore Professor, ESE

Cynthia Sung: Associate Professor, MEAM; Secondary faculty, CIS & ESE