Multimodal Proximity and Visuotactile Sensing with a Selectively Transmissive Soft Membrane

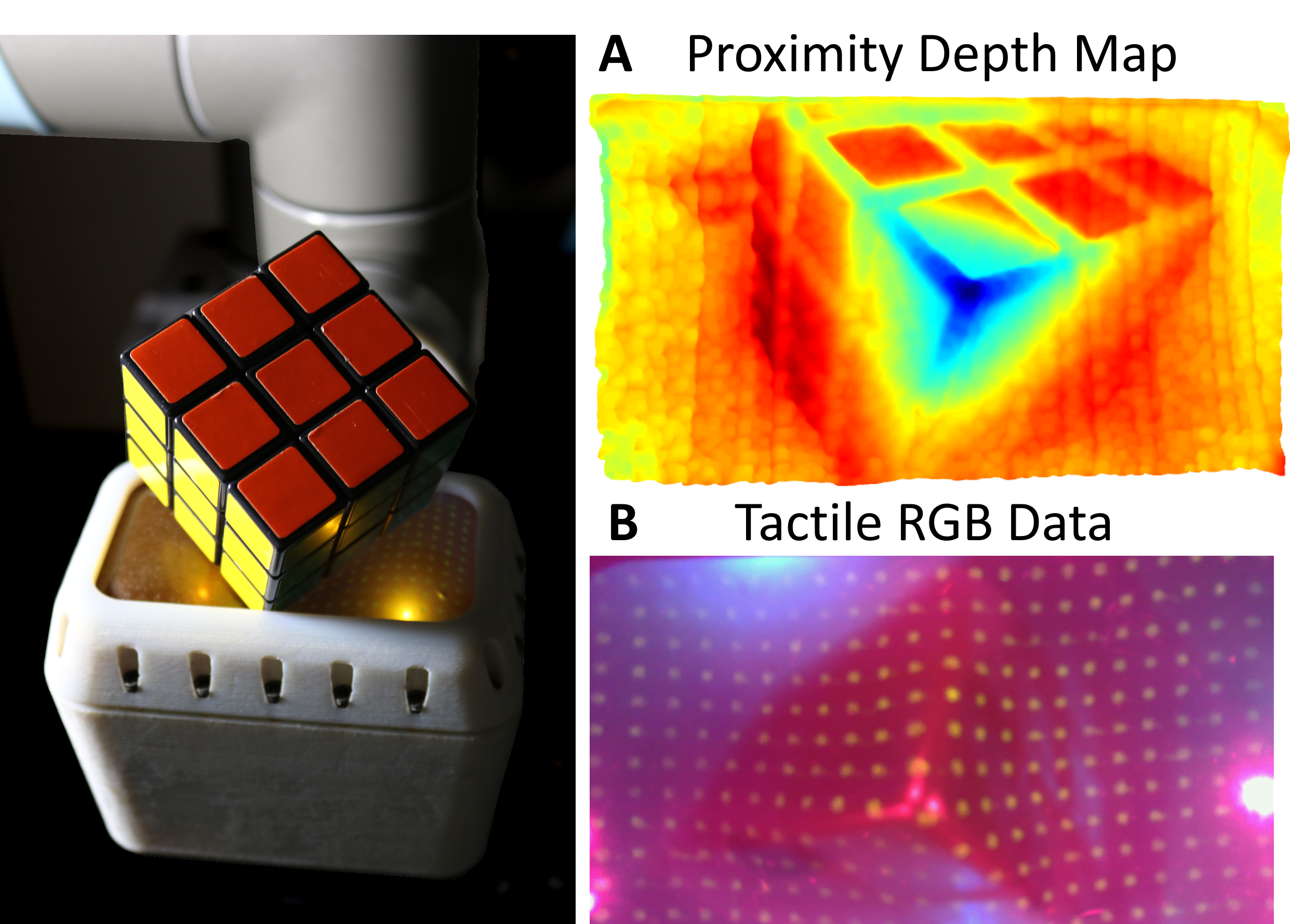

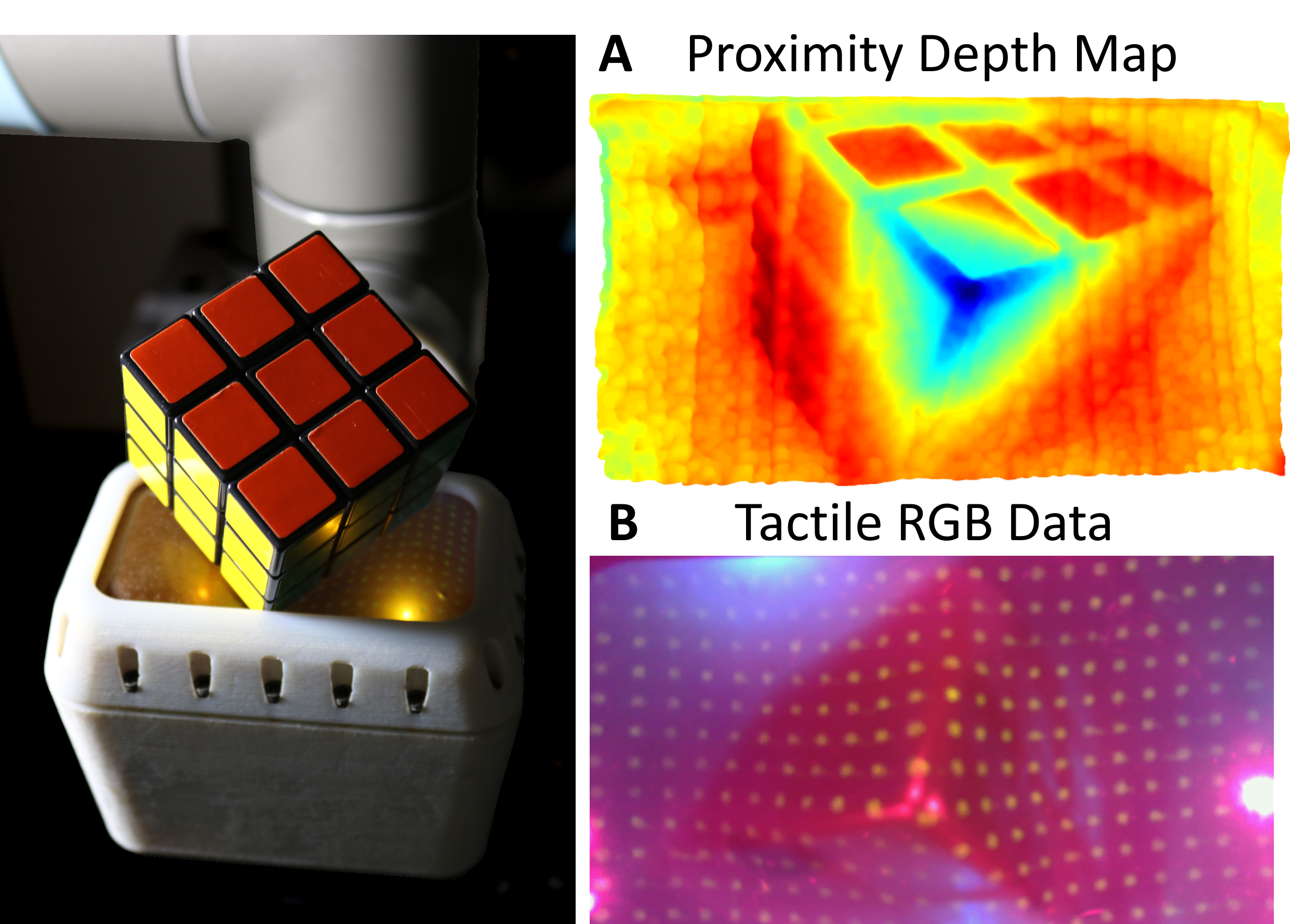

The most common sensing modalities in robot perception systems are vision and touch, which together provide global and highly localized data for manipulation. However, these modalities often fail to adequately capture the behavior of target objects during the critical moments when they transition from static, controlled contact with an end-effector to dynamic, uncontrolled motion. In this work, we present a novel multimodal visuotactile sensor that provides simultaneous visuotactile and proximity depth data. The sensor combines an RGB camera and an air pressure sensor, which sense touch, with an infrared time-of-flight (ToF) camera, which senses proximity. A selectively transmissive soft membrane enables both sensing modalities. We present the mechanical design, fabrication techniques, algorithm implementations, and evaluation of the sensor’s tactile and proximity modalities. The sensor is demonstrated in three open-loop robotic tasks: approaching and contacting an object, catching, and throwing. The fusion of tactile and proximity data could be used to capture key information about a target object’s transition behavior for sensor-based control in dynamic manipulation.
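The abstract does not specify how the tactile and proximity streams are fused. The sketch below is purely illustrative of one way the two signals could be combined to flag an object's transitions into and out of contact. It assumes a hypothetical per-frame interface that yields two things: a patch of ToF depths in metres and one membrane air-pressure reading in pascals. Each frame is labelled as free, approach, or contact, and every change of label is reported. The names `classify`, `transitions`, and `FusionConfig`, the threshold values, and the convention that a depth of 0 marks an invalid ToF pixel are all assumptions. None of them are the authors' algorithms.

```python
from dataclasses import dataclass
from enum import Enum
from statistics import median
from typing import Iterable, Iterator, Optional, Sequence, Tuple


class Phase(Enum):
    FREE = "free"          # nothing near the sensor and no contact
    APPROACH = "approach"  # object within ToF proximity range, membrane not pressed
    CONTACT = "contact"    # membrane air pressure has risen above its baseline


@dataclass(frozen=True)
class FusionConfig:
    # Hypothetical thresholds; real values would come from calibrating the hardware.
    pressure_delta_pa: float = 50.0  # pressure rise over baseline treated as contact
    proximity_range_m: float = 0.30  # median ToF depth at or below this counts as "near"


def classify(tof_depths_m: Sequence[float], pressure_pa: float,
             baseline_pa: float, cfg: FusionConfig = FusionConfig()) -> Phase:
    """Label one time-synchronized sample of ToF depths and membrane pressure."""
    # Touch takes priority: a pressure rise means the membrane is being pressed.
    if pressure_pa - baseline_pa >= cfg.pressure_delta_pa:
        return Phase.CONTACT
    # Assumed convention: a depth of 0 marks an invalid (no-return) ToF pixel.
    valid = [d for d in tof_depths_m if d > 0.0]
    if valid and median(valid) <= cfg.proximity_range_m:
        return Phase.APPROACH
    return Phase.FREE


def transitions(samples: Iterable[Tuple[Sequence[float], float]], baseline_pa: float,
                cfg: FusionConfig = FusionConfig()) -> Iterator[Tuple[int, Phase, Phase]]:
    """Yield (index, previous_phase, new_phase) each time the phase label changes."""
    prev: Optional[Phase] = None
    for i, (depths, pressure) in enumerate(samples):
        phase = classify(depths, pressure, baseline_pa, cfg)
        if prev is not None and phase != prev:
            yield i, prev, phase
        prev = phase
```

Consider a sequence in which an object comes close, presses the membrane, and then moves away. For it, `transitions` reports four changes: free to approach, approach to contact, contact to approach, and approach to free. The contact-to-approach change is the kind of exit from controlled contact that the abstract highlights. Pressure is checked before depth because ToF readings may be unreliable once an object is pressed against the membrane. This is also an assumption of the sketch.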

Assistant Professor, MEAM; GRASP Secondary Faculty Member (2020-2023) - Associate Professor, UW Madison

Director, GRASP Lab; Faculty Director, Design Studio (Venture Labs); Asa Whitney Professor, MEAM